In this tutorial, we will train a custom mask detector using the YOLOv6 PyTorch implementation.

HOW TO BEGIN?

- Open my Colab notebook on your browser.

- Click on File in the menu bar and click on Save a copy in drive. This will open a copy of my Colab notebook on your browser which you can now use.

- Next, once you have opened the copy of my notebook and are connected to the Google Colab VM, click on Runtime in the menu bar and click on Change runtime type. Select GPU and click on save.

You can use any one of the YOLOv6 models. In this tutorial, we will be using the small variant, i.e. YOLOv6s.

Follow these 7 steps to train your custom YOLOv6 object detector:

- 1) Mount the drive

- 2) Clone the YOLOv6 official git repository

- 3) Navigate to the yolov6 folder, install PyTorch and all the required libraries and dependencies

- 4) Create & upload the necessary files we need for training a custom detector

- 5) Load Tensorboard to visualize training metrics

- 6) Train the Model

- 7) Test the trained model

NOTE: If you get disconnected or lose your session for some reason, you have to run steps 1, 3, and 5 again.

1) Mount the drive

#mount drive

%cd ..

from google.colab import drive

drive.mount('/content/gdrive')

# this creates a symbolic link so that now the path /content/gdrive/My\ Drive/ is equal to /mydrive

!ln -s /content/gdrive/My\ Drive/ /mydrive

# list the contents of /mydrive

!ls /mydrive

#Navigate to /mydrive

%cd /mydrive
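Because step 1 has to be re-run after a disconnect, it can help to make the link creation safe to repeat: running `ln -s` a second time in the same session may leave a stray link inside the Drive folder instead of failing cleanly. The snippet below is a minimal sketch of that idea in Python. `ensure_symlink` is a hypothetical helper name, not part of Colab or YOLOv6.

```python
import os

def ensure_symlink(target: str, link: str) -> bool:
    """Create `link` pointing at `target` unless a link is already there.

    Returns True if a new link was created, False if one already existed.
    """
    if os.path.islink(link):
        return False  # link left over from an earlier run; nothing to do
    os.symlink(target, link)
    return True

# In Colab this would be called as (paths taken from the cell above):
# ensure_symlink('/content/gdrive/My Drive', '/mydrive')
```

If `/mydrive` already exists as a regular folder rather than a link, `os.symlink` raises `FileExistsError`, which is probably what you want to see rather than a silent overwrite.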

2) Clone the YOLOv6 official git repository

!git clone https://github.com/meituan/YOLOv6

3) Navigate to the YOLOv6 folder, Install PyTorch and all the required libraries and dependencies

#Navigate to /mydrive/YOLOv6

%cd /mydrive/YOLOv6

!pip install -r requirements.txt

#Check if pytorch installed

import torch

import os

from IPython.display import Image, clear_output # to display images

print(f"Setup complete. Using torch {torch.__version__} ({torch.cuda.get_device_properties(0).name if torch.cuda.is_available() else 'CPU'})")

4) Create & upload the following files which we need for training a custom detector

a. Labeled Custom Dataset

b. process_yolov6.py file (to split dataset into train-val folders for training)

c. data.yaml file

d. config file (custom_yolov6s.py)

e. YOLOv6 pre-trained weights

I have uploaded my custom files on GitHub. I am working with 2 classes, i.e. with_mask and without_mask.

4(a) Upload the Labeled custom dataset obj.zip file to the yolov6 folder on your drive and unzip it

Create the zip file obj.zip from the obj folder, which contains two folders: images and labels. The images folder holds all the input “.jpg” image files, and the labels folder holds their corresponding YOLO-format “.txt” label files.

Upload the zip file to the YOLOv6 folder on your drive.

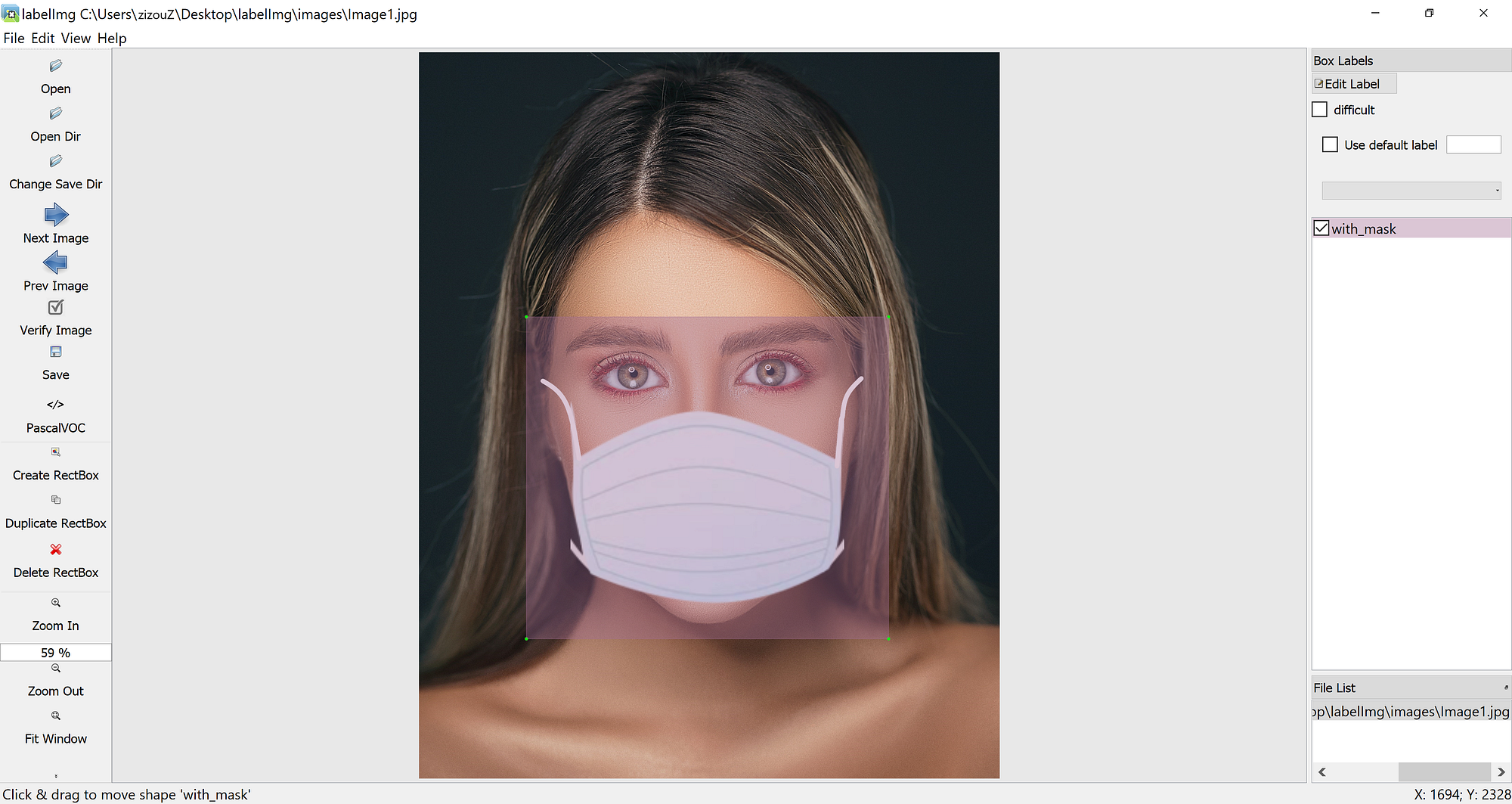

Labeling your Dataset

Input image example (Image1.jpg)

You can use any software for labeling like the labelImg tool.

Click on the link below to know more about the labeling process and other software for it:

NOTE: Garbage In = Garbage Out. Choosing and labeling images is the most important part. Try to find good-quality images. The quality of the data goes a long way towards determining the quality of the result.

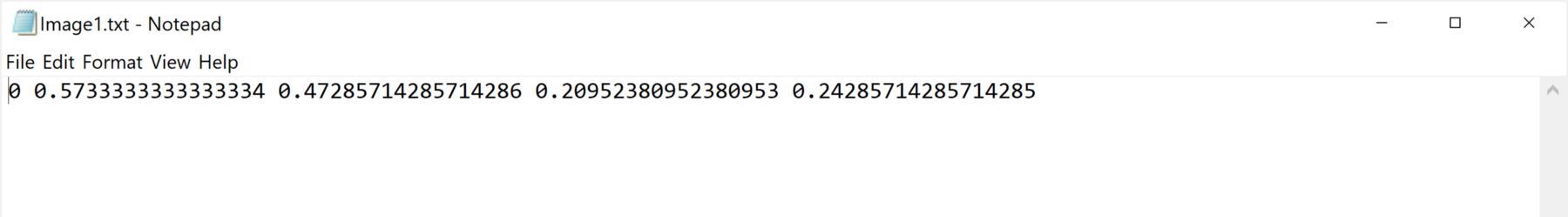

The output YOLO format TXT label file looks as shown below.
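As a reminder of the standard YOLO text format: each line of a label file describes one box as `class_id x_center y_center width height`, with the four box values normalized to the range 0 to 1 relative to the image size. The sketch below converts one such line back to pixel corners. The label line and the 640x480 image size are made-up values for illustration, not taken from the dataset, and `yolo_to_pixel_box` is a hypothetical helper name.

```python
def yolo_to_pixel_box(line: str, img_w: int, img_h: int):
    """Convert one YOLO label line to (class_id, x_min, y_min, x_max, y_max) in pixels."""
    class_id, xc, yc, w, h = line.split()
    # Scale the normalized centre and size back to pixel units
    xc, w = float(xc) * img_w, float(w) * img_w
    yc, h = float(yc) * img_h, float(h) * img_h
    return int(class_id), xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2

# Hypothetical line: class 0 (with_mask), box centred in a 640x480 image
print(yolo_to_pixel_box("0 0.5 0.5 0.25 0.5", 640, 480))
# (0, 240.0, 120.0, 400.0, 360.0)
```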

Unzip the obj.zip dataset and its contents

!unzip -q /mydrive/YOLOv6/obj.zip -d /mydrive/YOLOv6

Note: You can also use other methods to get your dataset like the curl command to download the dataset from Roboflow. Visit Roboflow and go to the Public Datasets tab for more datasets.

curl -L "https://public.roboflow.ai/ds/YOUR-API-KEY-HERE" > roboflow.zip; unzip roboflow.zip; rm roboflow.zip

*Since I already have a simple dataset archive ready I will be using that.

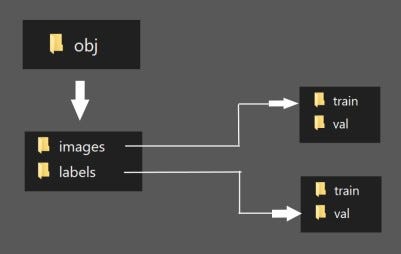

4(b) Split the dataset images and labels into train-val. Run the “process_yolov6.py” python script to create the train & val folders inside the images & labels folders

Here the train folder will have 80% of the dataset images and their labels while the val folder will have 20% of the dataset images and their labels

The split folders will be laid out as follows: obj/images/train, obj/images/val, obj/labels/train, and obj/labels/val.

Run the process script

# run process_yolov6.py (this creates the train and val folders inside the images and labels folders)

!python process_yolov6.py

# list the contents of the obj folder to check if the train and val folders have been created

!ls obj
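The process_yolov6.py script itself is not reproduced in this post. As a rough illustration of what an 80/20 split involves, here is a minimal sketch that shuffles the file names (without extension) reproducibly and cuts off 20% for validation. The function name, the fixed seed, and the `Image1` to `Image10` names are assumptions for the example, and the real script may work differently.

```python
import random

def split_stems(stems, val_fraction=0.2, seed=0):
    """Shuffle file stems reproducibly and return (train, val) lists."""
    stems = sorted(stems)               # fixed starting order before shuffling
    random.Random(seed).shuffle(stems)  # seeded so the split is repeatable
    n_val = int(len(stems) * val_fraction)
    return stems[n_val:], stems[:n_val]

train, val = split_stems([f"Image{i}" for i in range(1, 11)])
print(len(train), len(val))  # 8 2
```

For each stem, the matching “.jpg” would then go to obj/images/train or obj/images/val, and the matching “.txt” to the same-named folder under obj/labels, so that every image stays paired with its label.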

4(c) Create your data.yaml file and upload it to the YOLOv6 folder in your drive

This file contains the paths to your train and val images. We created these 2 folders in the previous step.

This file also contains the number of classes (nc) and their names.

data.yaml file is shown below:

# train and val data as 1) directory: path/images/, 2) file: path/images.txt, or 3) list: [path1/images/, path2/images/]

train: /mydrive/YOLOv6/obj/images/train

val: /mydrive/YOLOv6/obj/images/val

# test: optional

is_coco: False

nc: 2

names: ['with_mask', 'without_mask']
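A common slip when adapting this file to another dataset is leaving nc out of step with the names list. One way to avoid that, sketched here with a hypothetical `build_data_yaml` helper, is to generate the file text from the class names so that nc is always derived from them. The keys are the ones shown in the file above.

```python
def build_data_yaml(train_dir, val_dir, names):
    """Return data.yaml text with nc derived from the class-name list."""
    lines = [
        f"train: {train_dir}",
        f"val: {val_dir}",
        "is_coco: False",
        f"nc: {len(names)}",   # always matches the number of names
        f"names: {names}",     # Python list repr is a valid YAML flow list for simple names
    ]
    return "\n".join(lines) + "\n"

text = build_data_yaml(
    "/mydrive/YOLOv6/obj/images/train",
    "/mydrive/YOLOv6/obj/images/val",
    ["with_mask", "without_mask"],
)
print(text)
```

The returned text can be written to data.yaml with a plain `open(...).write(text)`.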

4(d) Set the config file

(Define YOLOv6 Model Configuration and Architecture)

Next, we need a model configuration file for our custom object detector. For this tutorial, we will be using YOLOv6s. You have the option to pick from all the YOLOv6 models mentioned below:

- YOLOv6n

- YOLOv6s

- YOLOv6m

- YOLOv6l

- YOLOv6t

You will find these inside the YOLOv6/configs folder. You can also edit the structure of the network in this step, though rarely will you need to do this. Also, edit the path of the pre-trained weights in the config file.

#from

pretrained=None,

#to

pretrained='yolov6s.pt', # download pretrain model from YOLOv6 github if use pretrained model

#we will download the pretrained weights in the next step 4(e)

- Below is the YOLOv6s model configuration file, which I will edit and name custom_yolov6s.py

The default yolov6s.py config file is shown below (Edit the pretrained line from None to the path of your model weights and rename the file to custom_yolov6s.py)

# YOLOv6s model

model = dict(

type='YOLOv6s',

pretrained=None,

depth_multiple=0.33,

width_multiple=0.50,

backbone=dict(

type='EfficientRep',

num_repeats=[1, 6, 12, 18, 6],

out_channels=[64, 128, 256, 512, 1024],

fuse_P2=True,

cspsppf=True,

),

neck=dict(

type='RepBiFPANNeck',

num_repeats=[12, 12, 12, 12],

out_channels=[256, 128, 128, 256, 256, 512],

),

head=dict(

type='EffiDeHead',

in_channels=[128, 256, 512],

num_layers=3,

begin_indices=24,

anchors=3,

anchors_init=[[10,13, 19,19, 33,23],

[30,61, 59,59, 59,119],

[116,90, 185,185, 373,326]],

out_indices=[17, 20, 23],

strides=[8, 16, 32],

atss_warmup_epoch=0,

iou_type='giou',

use_dfl=False, # set to True if you want to further train with distillation

reg_max=0, # set to 16 if you want to further train with distillation

distill_weight={

'class': 1.0,

'dfl': 1.0,

},

)

)

solver = dict(

optim='SGD',

lr_scheduler='Cosine',

lr0=0.01,

lrf=0.01,

momentum=0.937,

weight_decay=0.0005,

warmup_epochs=3.0,

warmup_momentum=0.8,

warmup_bias_lr=0.1

)

data_aug = dict(

hsv_h=0.015,

hsv_s=0.7,

hsv_v=0.4,

degrees=0.0,

translate=0.1,

scale=0.5,

shear=0.0,

flipud=0.0,

fliplr=0.5,

mosaic=1.0,

mixup=0.0,

)

Copy the edited config file above to the YOLOv6 root folder

- Set the names of the files below according to the model you are using. I am using YOLOv6s model.

#Copy the yolov6s.py config file to your drive inside the YOLOv6 folder

!cp configs/yolov6s.py /mydrive/YOLOv6/

#Rename the yolov6s.py file to custom_yolov6s.py

!mv yolov6s.py custom_yolov6s.py

#Set the path of the pre-trained weights in custom_yolov6s.py

!sed -i "s/pretrained=None/pretrained='yolov6s.pt'/" custom_yolov6s.py
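The sed edit does nothing, and reports nothing, if the config does not contain the exact text pretrained=None. If you would rather get an error in that case, the same substitution can be sketched in Python. `set_pretrained` is a hypothetical helper, and the sample string is a shortened stand-in for the real config file.

```python
def set_pretrained(config_text: str, weights_path: str) -> str:
    """Replace the first `pretrained=None` with a quoted weights path."""
    old = "pretrained=None"
    if old not in config_text:
        raise ValueError("no 'pretrained=None' entry found in config")
    return config_text.replace(old, f"pretrained='{weights_path}'", 1)

# Shortened stand-in for the contents of custom_yolov6s.py
sample = "model = dict(\n    type='YOLOv6s',\n    pretrained=None,\n)"
print(set_pretrained(sample, "yolov6s.pt"))
```

To apply it to the real file, read custom_yolov6s.py into a string, pass it through the function, and write the result back.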

4(e) Download the YOLOv6 pre-trained weights

For training, download the pre-trained weights file for your selected model whose path we gave in our custom config file. Since I am using the yolov6s model, I will download the weights for yolov6s.

!wget https://github.com/meituan/YOLOv6/releases/download/0.4.0/yolov6s.pt

#wget https://github.com/meituan/YOLOv6/releases/download/0.4.0/yolov6n.pt

#wget https://github.com/meituan/YOLOv6/releases/download/0.4.0/yolov6m.pt

#wget https://github.com/meituan/YOLOv6/releases/download/0.4.0/yolov6l.pt

#wget https://github.com/meituan/YOLOv6/releases/download/0.2.0/yolov6t.pt

Note: You have to download the weights for your model.

5) Load Tensorboard to visualize training metrics

%load_ext tensorboard

%tensorboard --logdir runs/train

6) Train the model

We can pass the following arguments in the training command:

- img: define input image size. The default is 640. See this table for more info

- batch: determine batch size

- epochs: define the number of training epochs.

- data: set the path to our yaml file (data.yaml file)

- conf: specify our model configuration

- name: result names

- write_trainbatch_tb: a boolean flag (--write_trainbatch_tb) indicating that we want to write the logs to TensorBoard.

- weights: specify the path to pre-trained weights. You can use the weights of whichever model you want to train.

— yolov6n.pt

— yolov6s.pt

— yolov6m.pt

— yolov6l.pt

— yolov6t.pt

We downloaded the above specified pre-trained weights in Step 4(e).

!python tools/train.py --batch 32 --conf configs/custom_yolov6s.py --data data/data.yaml --fuse_ab --write_trainbatch_tb --device 0
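If you plan to try several batch sizes or configs, it may be easier to keep the settings in one place and build the command from them. The sketch below does this with a hypothetical `build_train_command` helper. It only uses the flags that appear in the command above and does not check them against tools/train.py.

```python
import shlex

def build_train_command(options, switches):
    """Build the argument list for tools/train.py from value options and on/off switches."""
    cmd = ["python", "tools/train.py"]
    for flag, value in options.items():
        cmd += [f"--{flag}", str(value)]        # options that take a value
    cmd += [f"--{name}" for name in switches]   # boolean flags, present means on
    return cmd

cmd = build_train_command(
    {"batch": 32, "conf": "configs/custom_yolov6s.py", "data": "data/data.yaml", "device": 0},
    ["fuse_ab", "write_trainbatch_tb"],
)
print(shlex.join(cmd))
```

The printed line can be pasted after a `!` in a Colab cell, or the list can be passed to `subprocess.run(cmd)`.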

Retraining from the last saved checkpoint

# single GPU training.

!python tools/train.py --resume

7) Test the trained model

Evaluate the model using the eval.py script.

!python tools/eval.py --data data/data.yaml --weights /mydrive/YOLOv6/runs/train/exp/weights/best_ckpt.pt --task val --device 0 --verbose
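The path above points at the run folder named exp. If you have trained more than once, the checkpoint you want may sit in a different run folder. The sketch below picks the most recently modified best_ckpt.pt, assuming each run writes to its own sub-folder of runs/train with the same weights/best_ckpt.pt layout as the path above. `latest_checkpoint` is a hypothetical helper, not part of YOLOv6.

```python
import glob
import os

def latest_checkpoint(train_root, filename="best_ckpt.pt"):
    """Return the most recently modified checkpoint under train_root, or None."""
    # Assumed layout: <train_root>/<run folder>/weights/<filename>
    pattern = os.path.join(train_root, "*", "weights", filename)
    candidates = glob.glob(pattern)
    return max(candidates, key=os.path.getmtime) if candidates else None

# e.g. latest_checkpoint('/mydrive/YOLOv6/runs/train')
```

The returned path can be passed to the --weights argument of the eval and infer commands.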

DETECTION ON IMAGES (Use infer.py script)

Run Detector

!python tools/infer.py --yaml data/data.yaml --weights /mydrive/YOLOv6/runs/train/exp/weights/best_ckpt.pt --source /mydrive/mask_test_images/image.jpg --device 0 --view-img

Display the image outputs

#display inference on ALL test images

import glob

from IPython.display import Image, display

for imageName in glob.glob('/mydrive/YOLOv6/runs/detect/exp/*.jpg'): #assuming JPG

    display(Image(filename=imageName))

    print("\n")

DETECTION ON VIDEOS (Use infer.py script)

Run Detector

!python tools/infer.py --source /mydrive/mask_test_videos/test.mp4 --yaml data/data.yaml --weights /mydrive/YOLOv6/runs/train/exp/weights/best_ckpt.pt --device 0

# 0 # webcam

# img.jpg # image

# vid.mp4 # video

# path/ # directory

# path/*.jpg # glob

# 'https://youtu.be/Zgi9g1ksQHc' # YouTube

# 'rtsp://example.com/media.mp4' # RTSP, RTMP, HTTP stream

(OPTIONAL STEP) CONVERSION

CONVERT TO ONNX, TensorRT MODEL

(Using the script files in YOLOv6’s repository here)

Convert using the scripts inside the YOLOv6/deploy directory

ONNX

# ONNX

!python deploy/ONNX/export_onnx.py --weights /mydrive/YOLOv6/runs/train/exp/weights/best_ckpt.pt --simplify --device 0

TensorRT (read more about this step here)

# TensorRT (TensorRT has to be converted on the same GPU it will be inferenced on)

!python onnx_to_tensorrt.py --fp16 --int8 -v \

--max_calibration_size=${MAX_CALIBRATION_SIZE} \

--calibration-data=${CALIBRATION_DATA} \

--calibration-cache=${CACHE_FILENAME} \

--preprocess_func=${PREPROCESS_FUNC} \

--explicit-batch \

--onnx ${ONNX_MODEL} -o ${OUTPUT}

That’s it for YOLOv6!

References

YOLOv6

https://github.com/meituan/YOLOv6

https://blog.roboflow.com/how-to-train-yolov6-on-a-custom-dataset/

https://github.com/meituan/YOLOv6/blob/main/turtorial.ipynb

https://github.com/meituan/YOLOv6/blob/main/docs/Train_custom_data.md