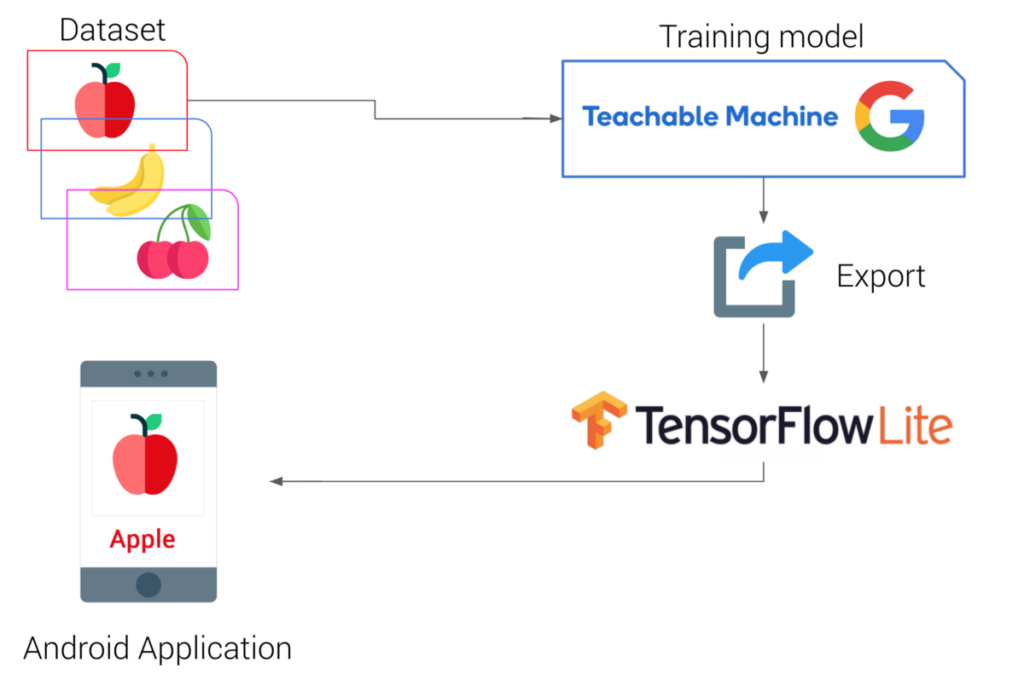

Train a Deep Learning model for custom object image classification using Teachable Machine, convert it to a TFLite model, and finally deploy it on mobile devices using the sample TFLite image classification app from TensorFlow’s GitHub.

IMPORTANT

THIS IS FOR AN OLDER JAVA VERSION. YOU CAN SWITCH TO PREVIOUS VERSIONS ON GITHUB. USE THE FOLLOWING LINK FOR THE JAVA VERSION REFERENCE APP USED IN THIS TUTORIAL:

https://github.com/tensorflow/examples/tree/demo/lite/examples/image_classification/android

Roadmap

- Collect the dataset of images.

- Open Teachable Machine's image project.

- Train the model.

- Evaluate the model.

- Export the model.

- Create a TFLite model for Android.

- Download the sample image classification app from TensorFlow and adjust it for your custom TFLite model.

Image classification (or image recognition) means showing an image to the device's camera and having the model tell you which class the image belongs to.
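To make "which class it belongs to" concrete, here is a minimal sketch of the last step of any classifier: the model produces one score per class, and the app reports the highest-scoring labels. The function name top_k and the scores are made up for illustration and are not taken from the sample app.

```python
from typing import List, Tuple


def top_k(labels: List[str], scores: List[float], k: int = 3) -> List[Tuple[str, float]]:
    """Return up to k (label, score) pairs, highest score first."""
    ranked = sorted(zip(labels, scores), key=lambda pair: pair[1], reverse=True)
    # Slicing never fails when there are fewer than k classes.
    return ranked[:k]


labels = ["with_mask", "without_mask"]
scores = [0.09, 0.91]  # made-up model output, one score per class

best_label, best_score = top_k(labels, scores, k=1)[0]
print(best_label)  # without_mask
```

With only 2 classes, asking for the top 3 simply returns both classes, ordered by score.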

In this tutorial, I will walk you through custom image classification: training a simple deep learning model with Google's online tool Teachable Machine, exporting the model to the TensorFlow Lite format, which is compatible with Android devices, and finally deploying the model on an Android device.

Model

The training platform for our custom image classifier is Google's Teachable Machine. It lets you go through the deep learning training process in just a few clicks: first upload images for each class from your computer or capture them with a webcam, then train the model, and finally export the trained model in the format of your choice and download it. I am training a model for mask image classification. This is done in the 5 steps listed below. The first 3 steps are the same as in the Train image classification model using Teachable Machine tutorial.

- Upload your dataset

- Train the model

- Preview the model / test the model using JavaScript

- Export the model in TFLite format

- Download the TFLite model and adjust the TFLite Image Classification sample app with your custom model

Requirements

- Your custom object image dataset with different classes.

- Android Studio 3.2+ (installed on a Linux, Mac, or Windows machine)

- Android device in developer mode with USB debugging enabled

- A USB cable (to connect the Android device to your computer)

LET’S BEGIN !!


Objective: Build a custom object image classification Android app using Teachable Machine.

To start, go to the Teachable-Machine site.

NOTE: Teachable Machine only supports the MobileNet model as of now.

Click on Get Started on the homepage and choose Image Project. You can also sign in with your Google account to save your model files to Google Drive; otherwise you will lose all your images and model files if you close the tab or refresh the page.

Step 1) Upload your dataset

Create and upload the dataset to Teachable Machine and name the classes accordingly. I am training a model for mask image classification with 2 classes: “with_mask” and “without_mask”.

Step 2) Train the image classification model

During training, you can tweak the hyperparameters like:

- Number of epochs

- Batch size

- Learning rate

Click on Train Model after setting the values of the hyperparameters. This process will take a while depending on the number of images and epochs.
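To get a feel for why training time grows with the number of images and epochs, the sketch below counts weight updates. It assumes one update per batch and that every uploaded image is used for training (the tool may hold some images out for validation). The dataset size and hyperparameter values are hypothetical.

```python
import math


def training_steps(num_images: int, batch_size: int, epochs: int) -> int:
    """Total number of weight updates, assuming one update per batch."""
    steps_per_epoch = math.ceil(num_images / batch_size)
    return steps_per_epoch * epochs


# Hypothetical run: 800 images, batch size 16, 50 epochs.
print(training_steps(800, 16, 50))  # 50 steps per epoch * 50 epochs = 2500
```

Doubling the epochs or the dataset size doubles the number of updates, while a larger batch size reduces the number of updates per epoch.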

Important: During training, do not switch tabs as it will stop the training process.

Step 3) Preview your trained model or test the model using JavaScript

Once the training is over, you can preview your trained model in the preview window. You can either upload images to test or you can test using a webcam. See ‘preview’ test results for both below.

USING WEBCAM

USING IMAGES

You can also test the model using JavaScript.

The JavaScript format lets you use your model in browser-based projects.

First, click on Export Model. Next, go to the TensorFlow.js tab and click on Upload my model. When you upload your model, Teachable Machine hosts it at the given shareable link for free. You can share that link with anyone you'd like to use your model; anyone who has the link can use your model in their projects. Note that your model is published to Google servers, but the examples you used to make the model are not. Only your model is uploaded: the mathematical program that predicts which class you're showing it.

You will see a JavaScript code snippet with your model's link in it. You can use this code to test your model in a browser.

You can also test your model online using the p5.js Web Editor. p5.js is a JavaScript library with the goal of making coding accessible to artists, designers, educators, and beginners, and the Web Editor lets you run p5.js code in the browser. To use it for your custom-trained model, select the p5.js code snippet tab and copy the code. Next, click on the p5.js Web Editor link shown in the pic below.

Once you open the p5.js web editor tool you will find the default code there for daytime and nighttime classification. Remove the entire old code and paste the new code you copied from your custom model’s p5.js code snippet and press play. Now, you can test your model using the p5.js web editor tool.

Step 4) Export the model in TFLite format

Finally, export the model in the TensorFlow Lite format to deploy it on mobile devices.

Click on Export Model and select the TensorFlow Lite tab. Next, choose the model format you want. You can download the quantized as well as the floating-point file format of TFLite.
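The difference between the two formats can be illustrated with the affine 8-bit scheme that TensorFlow Lite quantization is based on, where a real value is approximated as scale * (q - zero_point). The sketch below assumes an unsigned 8-bit range; the scale and zero point are made-up values, not ones read from a Teachable Machine model.

```python
def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to an unsigned 8-bit integer, clamping to [0, 255]."""
    q = round(x / scale) + zero_point
    return max(0, min(255, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value from its 8-bit representation."""
    return scale * (q - zero_point)


SCALE, ZERO_POINT = 0.25, 128  # hypothetical quantization parameters

q = quantize(1.3, SCALE, ZERO_POINT)
print(q)                                 # 133
print(dequantize(q, SCALE, ZERO_POINT))  # 1.25
```

The value 1.3 comes back as 1.25: the quantized file is smaller and usually faster on mobile hardware, at the cost of a small rounding error like this one, while the floating-point file keeps full precision.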

NOTE: Teachable Machine only supports MobileNet model architecture as of now.

Place the .tflite file in the assets folder of the Android project directory, and update the file name in the Java file that reads it accordingly (see Step 5).

Step 5) Download the TFLite model and adjust the TFLite Image Classification sample app with your custom model

Build the app using Android Studio as described in the link below:

- ⦿ Download the old version of the TensorFlow Lite examples archive from here and unzip it. You will find an image classification app inside:

examples-master\lite\examples\image_classification

- ⦿ Next, copy your TFLite models and the labels.txt file into the assets folder of the image classification Android app:

examples-master\lite\examples\image_classification\android\models\src\main\assets
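If you prefer to script the copy step, a minimal sketch could look like the following. The file names match the ones used later in this tutorial (model.tflite, model_quant.tflite, labels.txt); the function name copy_to_assets is hypothetical, and your exported files may be named differently, so adjust MODEL_FILES as needed.

```python
import shutil
from pathlib import Path
from typing import List

# File names assumed from this tutorial; rename to match your own export.
MODEL_FILES = ("model.tflite", "model_quant.tflite", "labels.txt")


def copy_to_assets(export_dir: Path, assets_dir: Path) -> List[str]:
    """Copy the model and label files found in export_dir into assets_dir.

    Returns the names of the files that were actually copied.
    """
    assets_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for name in MODEL_FILES:
        source = export_dir / name
        if source.is_file():
            shutil.copy2(source, assets_dir / name)
            copied.append(name)
    return copied
```

Call it with the folder where you unzipped the Teachable Machine export and the assets path shown above, then check that the returned list contains every file you expect.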

Switch between implementation solutions (Task library & Support Library)

This Image Classification Android reference app demonstrates two implementation solutions:

(1) lib_task_api that leverages the out-of-box API from the TensorFlow Lite Task Library;

(2) lib_support that creates the custom inference pipeline using the TensorFlow Lite Support Library.

Inside Android Studio, you can change the build variant to whichever one you want to build and run: go to Build > Select Build Variant and select one from the drop-down menu (as shown in the pic below). See configure product flavors in Android Studio for more details.

Note: If you simply want the out-of-box API to run the app, the recommended option is lib_task_api for inference. If you want to customize your own models and control the detail of inputs and outputs, it might be easier to adapt your model inputs and outputs by using lib_support.

IMPORTANT:

The default implementation is “lib_task_api”. For beginners, you can choose the “lib_support” implementation as it does not require any metadata while the lib_task_api implementation requires metadata. If you are using lib_task_api you can create the metadata using the script in the metadata folder inside the Image Classification Android app we downloaded. You need to install certain packages for it as mentioned in the requirements.txt file and also adjust the model info and its description according to your requirements. Use the command in the link below to create metadata for it.

You can also use the Colab notebook below to create metadata:

Also, check out the following 2 links to learn more about metadata for image classifiers.

- https://www.tensorflow.org/lite/convert/metadata

- https://www.tensorflow.org/lite/convert/metadata_writer_tutorial#image_classifiers

⦿ NOTE: I am using the lib_support implementation here which requires no metadata.

⦿ Next, make changes in the code as mentioned below.

- ➢ Edit the Gradle build module file. Open the “build.gradle” file $TF_EXAMPLES/examples-master/lite/examples/image_classification/android_play_services/app/build.gradle and comment out the line apply from: 'download_model.gradle', which downloads the default image classification app's TFLite model and overwrites your assets.

// apply from:'download_model.gradle'

- ➢ Select Build -> Make Project and check that the project builds successfully. You will need the Android SDK configured in the settings, with at least SDK version 23. The build.gradle file will prompt you to download any missing libraries.

- ➢ Next, give the path to your TFLite models model.tflite & model_quant.tflite, and your labels file labels.txt. To do this, open the ClassifierFloatMobileNet.java & ClassifierQuantizedMobileNet.java files in a text editor or inside Android Studio itself and find the definition of getModelPath(). Verify that it points to the respective TFLite model (model.tflite & model_quant.tflite in my case). Next, verify that getLabelPath() points to your label file “labels.txt”. (The two Java files for the lib_support implementation are in $TF_EXAMPLES/lite/examples/image_classification/android/lib_support/src/main/java/org/tensorflow/lite/examples/classification/tflite/)

- ➢ If you also have quantized and floating-point TFLite models for EfficientNet, repeat the step above so that getModelPath() and getLabelPath() in all the classifiers point to their respective models. Note that the app expects all 4 types of models for your custom dataset; otherwise it will give an error. In this tutorial I am only demonstrating the floating-point and quantized models for MobileNet, so I removed all usages of and references to the other 2 EfficientNet classifiers in Android Studio.

- ➢ I have also made a few other changes in the code: I am using only 2 classes, while the sample Image Classification app displays 3 classes by default, so I adjusted it for my requirements. I have shared my custom Android app for 2 classes; you can find the link at the bottom of this article.

- ➢ Finally, connect an Android device to the computer and be sure to approve any ADB permission prompts that appear on your phone. Select Run -> Run app. Test your app before making any other changes or adding more features to it. Now that you've made a basic image classification app using Teachable Machine, you can try changing your TFLite model or adding customizations to the app. Have fun!
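A common reason for the app to fail at startup is a mismatch between the file names returned by getModelPath() / getLabelPath() and the files in the assets folder. The sketch below is one way to cross-check them. It uses a simple regular expression rather than a real Java parser, and it assumes each method body returns a single string literal; the helper names and the SAMPLE snippet are illustrative only.

```python
import re
from typing import Iterable, List, Optional

# Illustrative Java snippet, shaped like the classifier methods described above.
SAMPLE = '''
  @Override
  protected String getModelPath() {
    return "model.tflite";
  }

  @Override
  protected String getLabelPath() {
    return "labels.txt";
  }
'''


def returned_literal(java_source: str, method: str) -> Optional[str]:
    """Return the string literal that the given no-argument method returns."""
    pattern = re.escape(method) + r'\s*\(\s*\)\s*\{[^}]*?return\s+"([^"]*)"\s*;'
    match = re.search(pattern, java_source)
    return match.group(1) if match else None


def missing_assets(java_source: str, asset_names: Iterable[str]) -> List[str]:
    """List the methods whose returned file name is not among the assets."""
    names = set(asset_names)
    problems = []
    for method in ("getModelPath", "getLabelPath"):
        literal = returned_literal(java_source, method)
        if literal is None or literal not in names:
            problems.append(f"{method} -> {literal}")
    return problems


print(missing_assets(SAMPLE, ["model.tflite", "labels.txt"]))  # []
```

In practice you would read the text of a classifier file such as ClassifierFloatMobileNet.java and pass the file names found in the assets folder; an empty list means both paths resolve.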

Read the following TensorFlow image classification app GitHub Readme and Explore_the_code pages for the old Java version to learn more:

Mask dataset

Check out my YouTube video on this

CREDITS

Documentation / References

- TensorFlow Introduction

- TensorFlow Models Git Repository

- TensorFlow 2 Classification Model Zoo

- TensorFlow Lite sample applications

- TensorFlow Lite image classification Android example application

- TensorFlow tutorials

- Integrate image classifiers

- Adding metadata to TensorFlow Lite models

- TensorFlow Lite Metadata Writer API

- Create metadata command

- TensorFlow Lite image classification Android example application/Readme

- TensorFlow Lite Android image classification example/Explore_the_code